Ted Chiang wrote the story that inspired the movie Arrival. In another story, “The Merchant and the Alchemist’s Gate,” he imagines a door that takes people 20 years into the future, or 20 years into the past. The story revisits a classic paradox: Can a time-traveler from the future influence what we do? If yes, then the future the traveler came from can be changed. But if even simple choices are fixed, say your choice to visit a park this Sunday, do we have free will? Is the future immutable?

The theoretical physicist William Newcomb created a puzzle to test our thinking about such questions. Newcomb envisioned a being, say an alien, that can tell the future. The alien comes to Earth carrying two boxes – box A and box B – and places them in front of you. You can see that box B holds 1000 dollars. You can’t see inside box A. The alien says: “You have two choices: you can open only box A and take what is there, or you can open both boxes and take what is in both. But know that I can tell the future. If I have foreseen that you will choose only box A, then I have placed a million dollars in it. If I have foreseen that you will open both boxes, then I have placed nothing in box A, and you will get only the 1000 dollars in box B.” What will you do?

You may be thinking that the answer is obvious. However, people don’t seem to agree on what that obvious answer is! Even professional philosophers have been at loggerheads over this problem. Some reason: “The alien knows what I am going to do. Therefore, I should open only box A, because the alien, knowing that I will choose to open only box A, has placed a million dollars in it. If I were to open both boxes, the alien would have predicted my decision. It would have left box A empty, leaving me with only the 1000 dollars in box B.” Others claim this is nonsense and say: “When the two boxes are placed in front of me, box A either contains a million dollars or it doesn’t, and no one can change that. In either case I am better off choosing both boxes, since I will always end up with $1000 more than if I just open box A!”
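
Part of why both camps feel so sure of themselves shows up in a little arithmetic. The sketch below assumes something the story leaves open: that the alien’s forecasts are right with some probability p (the names and the parameter are hypothetical, chosen for illustration). Averaged over the alien’s guesses, one-boxing comes out ahead once p is just above one half; but once the boxes are filled, taking both always adds exactly $1000.

```python
from fractions import Fraction

MILLION = 1_000_000   # what box A holds if the alien foresaw one-boxing
THOUSAND = 1_000      # what box B always holds

def expected_winnings(p):
    """Average dollars for each choice, assuming the alien is right with probability p."""
    one_box = p * MILLION                    # box A is full only if the alien correctly foresaw one-boxing
    two_box = THOUSAND + (1 - p) * MILLION   # box B, plus box A only when the alien guessed wrong
    return one_box, two_box

def dominance_gain(box_a):
    """With the boxes already filled, taking both adds box B whatever box A holds."""
    return (box_a + THOUSAND) - box_a

# One-boxing has the higher average once p * M > T + (1 - p) * M, i.e. p > (M + T) / (2 * M).
BREAK_EVEN = Fraction(MILLION + THOUSAND, 2 * MILLION)

print(expected_winnings(Fraction(9, 10)))   # a 90%-reliable alien
print(BREAK_EVEN, float(BREAK_EVEN))
print(dominance_gain(0), dominance_gain(MILLION))
```

With a 90% reliable alien, one box is worth $900,000 on average and two boxes $101,000, yet `dominance_gain` is $1000 whatever box A holds. The paradox lives in the gap between these two calculations.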

Newcomb’s paradox seems contrived – not many of us have met all-knowing aliens. However, many people think they are good judges of character and claim they can predict what others will do. They are like the alien in the story. Suppose you meet such a person and ask to borrow 10 dollars from them, promising to repay it with interest. Will this good judge of character predict whether you intend to honor your promise, and give you the money only if you are trustworthy? Or are you completely free to choose whether to repay it once you have it, making predictions worthless?

We all constantly switch between the two sides in Newcomb’s paradox: Like the alien, we forecast the future, and trust our predictions will be borne out. But at the same time, we want our choices to be free, and not preordained and predictable.

The paradox was first published by Robert Nozick, although it was formulated by William Newcomb. A lot has been written about Newcomb’s paradox: Here is a clear explanation of the paradox, and here is a good video.

There are many variants of the paradox, including the more relatable Parfit’s Hitchhiker and the more abstract meta-Newcomb paradox. A mathematical treatment that makes it a bit clearer what is going on can be found here. While this explanation is mathematically correct, I am not completely convinced that it resolves all questions.

As we learn in elementary school, DNA is the blueprint of life. It guides our growth, our deterioration, and everything in between. Cells translate information encoded in DNA into proteins, and proteins provide structure to our bodies, and guide the chemical processes that are life.

The information encoded in DNA is translated into amino acid chains. These chains are like self-assembling origami: once created, they fold themselves into shape. Each chain becomes a properly functioning protein only after folding into the right structure. We understand well how DNA determines what an amino acid chain is made of, but we know far less about how it determines the final shape of a protein.

Think of a pipe cleaner, say a foot long. Now imagine that at every inch along its length you are free to bend it at any angle, and in any direction you choose. It is easy to see that the number of possible shapes you can get after all these bends is astronomical. Proteins are similar: An amino acid chain can fold in so many ways that it is very difficult to predict the actual form a protein assumes just from knowing what it is made of.
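
To get a feel for how fast the number of shapes grows, here is a toy version of the pipe cleaner, a common simplification rather than a model of a real protein: the chain lives on a flat square grid, each link points up, down, left or right, and the chain may not pass through itself (a “self-avoiding walk”). The sketch simply counts every such shape by trying them all, treating rotated and mirrored copies as different.

```python
def count_shapes(links):
    """Count chains of `links` unit steps on a square grid that start at the origin
    and never revisit a grid point (self-avoiding walks), by exhaustive search."""
    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))   # the four directions a link can point

    def extend(pos, visited, remaining):
        if remaining == 0:
            return 1                              # one complete shape
        total = 0
        for dx, dy in steps:
            nxt = (pos[0] + dx, pos[1] + dy)
            if nxt not in visited:                # the chain may not cross itself
                visited.add(nxt)
                total += extend(nxt, visited, remaining - 1)
                visited.remove(nxt)               # undo, then try the next direction
        return total

    return extend((0, 0), {(0, 0)}, links)

for links in range(1, 11):
    print(links, count_shapes(links))
```

Each extra link multiplies the count by roughly 2.6 to 3, so a chain of a few hundred links is far beyond this kind of brute-force counting, and real chains can bend in three dimensions, not just four directions on a grid.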

Yet protein shape is extremely important. When folded correctly, proteins can recognize hostile bacteria, generate the power in our cells, and make fertilizer out of the nitrogen in our air. Misfolded proteins, on the other hand, lead to diseases like Alzheimer’s and cystic fibrosis. Some misfolded proteins can even cause other proteins to misfold, resulting in illnesses such as Mad Cow Disease.

The number of possible shapes for even small amino acid chains is huge. We cannot explore all possibilities even with the most powerful computers. Biophysicists and mathematicians have made great progress over the years by showing how the folding process can be broken into smaller steps that are easier for computers to tackle. But the problem remains far from solved, and scientists pit their programs against each other in competitions to see who can most accurately predict protein shapes from DNA sequences.
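
A back-of-the-envelope calculation, in the spirit of what is known as Levinthal’s paradox, shows why brute force is hopeless. The numbers are illustrative assumptions, not measurements: each amino acid can sit in just 3 local shapes, the chain has 100 amino acids, and a computer checks 10^13 shapes per second.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
AGE_OF_UNIVERSE_YEARS = 1.38e10          # approximate

def brute_force_years(amino_acids, shapes_each=3, checks_per_second=1e13):
    """Years needed to try every combination of local shapes, one at a time.
    All default parameters are illustrative assumptions."""
    total_shapes = shapes_each ** amino_acids      # exact integer; 3**100 is about 5e47
    return total_shapes / checks_per_second / SECONDS_PER_YEAR

years = brute_force_years(100)
print(f"{years:.1e} years, about {years / AGE_OF_UNIVERSE_YEARS:.0e} times the age of the universe")
```

Many real proteins fold in well under a second, so nature is clearly not searching blindly. That is exactly why predicting the final shape calls for cleverer methods than trying every possibility.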

Surprisingly, the same machine learning team that created the computer program that defeated the world champion in the game of Go is also a recent winner of a protein folding competition. Machine learning methods work best when computers can be trained on many examples. Since the 1950s, scientists have used a variety of methods to determine the structures of many proteins. This information is now used to train machines to make predictions that exceed the accuracy of anything we have seen before.

Understanding how proteins fold gives us insights into how our bodies work, and why they fail to do so. It may also allow us to design novel proteins that have never appeared in nature before. These new structures could break down pollutants, allow for green chemical and fuel production, or help us heal. Yet it is only with the help of machines that we will finally crack this fundamental question of how life takes shape.

Additional notes:

Thanks to Prof. Joff Silberg in the Department of BioSciences at Rice University for a number of helpful suggestions. John Lienhard

- This article from DeepMind describes the original research.

- The protein folding problem has different facets that I did not get a chance to explore here. Here is a good overview of the problem.

- Here is a report on the competition won by DeepMind.

- The protein folding problem and protein design have also been crowdsourced. You can find out more about this here.

This was written with my University of Houston colleague, Vaughn Climenhaga, for Engines of Our Ingenuity:

The international mathematics community was recently shaken by news of the death of Maryam Mirzakhani, one of the foremost mathematicians of her generation. Although she died much too young at the age of 40, she had already achieved more than most mathematicians do in a lifetime.

Mirzakhani grew up in Iran. Although she was initially not very interested in mathematics, by the age of 17 she had won a gold medal at the International Mathematical Olympiad. After completing her undergraduate degree at Sharif University, she came to the US to work on her PhD at Harvard.

Mathematics has a long history. As a result, many problems have been mulled over by scores of people for hundreds of years. This makes it quite daunting for students trying to make their first original contributions. However, Mirzakhani was fearless, and tackled some of the hardest problems in mathematics early on. Her tenacity is legendary: she worked on problems for years.

Mirzakhani did remarkable work in different areas of mathematics. Some of her most influential work studied paths called “geodesics”. Imagine an ant walking along a surface. If the ant moves straight ahead without turning left or right, it follows a geodesic. On a flat surface, the ant walks in a straight line. If the surface is curved, the ant’s path curves with it. On a sphere, for example, the ant walks along a “great circle,” which gives the shortest path between two points on the sphere’s surface. At a larger scale, great circles determine the routes flown by airplanes on long journeys.
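
On a sphere, the length of the ant’s geodesic between two points has a closed form. Here is a minimal sketch using the haversine formula, assuming a perfectly round Earth with a mean radius of 6371 km (the real Earth is slightly flattened, so this is an approximation). For comparison, it also measures the path that keeps to a constant latitude, which is not a geodesic except on the equator.

```python
import math

EARTH_RADIUS_KM = 6371.0   # mean radius; treats the Earth as a perfect sphere

def great_circle_distance(lat1, lon1, lat2, lon2, radius=EARTH_RADIUS_KM):
    """Shortest distance along the surface of a sphere (haversine formula); angles in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    h = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * radius * math.asin(min(1.0, math.sqrt(h)))   # clamp guards against rounding above 1

def along_parallel_distance(lat, lon1, lon2, radius=EARTH_RADIUS_KM):
    """Distance if you instead keep a constant latitude the whole way."""
    return radius * math.cos(math.radians(lat)) * math.radians(abs(lon2 - lon1))

# Two points on the 60th parallel, 180 degrees of longitude apart:
print(round(great_circle_distance(60, 0, 60, 180)))   # over the North Pole
print(round(along_parallel_distance(60, 0, 180)))      # hugging the parallel
```

For these two points the great circle runs over the North Pole and is about 6,700 km long, while following the 60th parallel takes about 10,000 km. This is why long-haul flights from North America to Europe or Asia swing far to the north.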

On other surfaces, geodesics can be more complicated. An ant walking along a geodesic on a donut can wind around the donut’s surface in a complicated way. It may eventually come back to where it started and continue traveling along the same path it just traversed. Or it may wander forever, coming close to, but never quite hitting the point from which it started its journey. Modern physics tells us that space itself is curved. Thus geodesics are also fundamental to understanding the universe we live in.
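
A flat model of the donut makes both behaviors easy to see. The sketch below uses a simplification, the “flat torus”: a square whose opposite edges are glued together, on which geodesics are straight lines. (On a real, curved donut the geodesics are more complicated still.) The ant walks with a fixed slope, and each time it completes a lap in one direction we check how far it is from its starting point in the other. With a rational slope such as 2/5 it returns exactly; with an irrational slope such as √2 it comes ever closer without ever arriving.

```python
import math
from fractions import Fraction

def closest_return(slope, max_laps):
    """Toy 'flat donut': the unit square with opposite edges glued together.
    An ant walking straight with the given slope crosses its starting vertical line
    once per lap; return the smallest distance from its starting point at those
    crossings, and the lap on which it happened."""
    best_gap, best_lap = 1, 0
    for lap in range(1, max_laps + 1):
        height = (slope * lap) % 1            # where the ant crosses x = 0 on this lap
        gap = min(height, 1 - height)         # distance to the start, the short way around
        if gap < best_gap:
            best_gap, best_lap = gap, lap
        if gap == 0:                          # back exactly where it began: the path now repeats
            break
    return best_gap, best_lap

print(closest_return(Fraction(2, 5), 1000))   # rational slope: exact return after 5 laps
print(closest_return(math.sqrt(2), 1000))     # irrational slope: close, but never exact
```

Using `Fraction` for the rational slope keeps the arithmetic exact, so "back at the start" really means a gap of zero rather than a tiny rounding error.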

Mirzakhani studied geodesics using the mathematical theory of “moduli spaces”. This is a very powerful, but difficult, theory that provides insights not just into geodesics and geometry, but also into many other areas of mathematics and physics ranging from number theory to quantum gravity.

In 2014, Mirzakhani was awarded the Fields Medal, the highest honor in mathematics. She was the first woman to win this award. But for her colleagues in the mathematics community, her legacy will be the beautiful mathematics she produced, as well as her incredible tenacity, optimism, humility, and vision.

Notes:

Numerous articles have been written about Maryam Mirzakhani before and after her death. Here is a good article about her life and her contributions. Here is another one focusing on her research.

To date, 76 people have been awarded the Nobel Memorial Prize in Economic Sciences (or more accurately the Swedish National Bank’s Prize in Economic Sciences in Memory of Alfred Nobel). Only one of these awardees was a woman. Elinor Ostrom received the prize for showing how people can manage environmental resources, often without governmental help. However, as a young woman she was rejected by a graduate program in economics. She had been discouraged from taking math classes as a girl, and this lack of preparation led to the rejection. She completed a PhD in political science instead, and became a legend in both fields.

Things worked out well for Professor Ostrom. But how many contributions from brilliant women have been lost or remain unacknowledged? Why are there relatively few women in fields such as physics, mathematics and engineering? There is no simple answer. However, research indicates that our expectations and assumptions may play an important role.

Think of someone who has great intuition and flashes of amazing insight. Perhaps you thought of Sherlock Holmes, Agent Mulder from The X-Files, or a scientist like Albert Einstein. Whoever came to mind, it was likely a man. Indeed, genius and innate aptitude are most often associated with males. For instance, there are nearly three Google searches for “Is my son gifted?” for every such search involving a daughter.

Sarah-Jane Leslie, Andrei Cimpian and their colleagues decided to see whether such expectations are related to the number of women in different disciplines. They noted that in certain fields of science and the humanities, people strongly associate success with raw aptitude. We expect innate ability to be essential for success in mathematics, economics, music composition, and philosophy. The researchers found that the stronger this belief in the need for brilliance in a discipline, the smaller the proportion of women working in it.

It could be that women are discouraged from entering these fields because they are not viewed as being as naturally brilliant as men. A less likely explanation is an actual difference in natural aptitude. Although this is difficult to assess, much research suggests that natural differences between the sexes are not the cause. Moreover, the number of women in technical fields has been increasing for some time. A shift in our expectations is a more likely explanation than a sudden increase in the number of brilliant women being born.

Few have the ability and motivation to become truly outstanding doctors, engineers and scientists, but we all benefit from their work. Recently the same group of researchers found that stereotypes about brilliance affect girls as young as six. Young girls associate hard work and good performance in school with their own gender, but believe that it is boys who are smart. This suggests a sobering conclusion: If we don’t want to miss the next female Nobel Prize winner, we need to encourage girls earlier than we thought. Not in college, but in kindergarten.

References and notes:

Thanks to Andrei Cimpian and Weiji Ma for helpful comments and suggestions.

You can find the original research here. A review of the findings can be found here. The report is not without controversy, as you can see in the technical comment that followed it (right side of article). Research that shows that stereotypes about brilliance affect even girls as young as six has just been reported here. The authors also provided a nice overview of the research in the NYT, and Ed Yong has written about it in the Atlantic.

The list of Nobel Prizes awarded to women can be found here. The recent movie “Hidden Figures” provides good examples of extraordinary contributions by brilliant women that remained unacknowledged for a long time.

In a memorable scene from the movie “The Princess Bride,” the Dread Pirate Roberts challenges the Sicilian bandit Vizzini to a battle of wits. The pirate puts a cup of wine in front of himself and one in front of Vizzini. He then asks the little Sicilian to guess which cup is poisoned. Vizzini answers by taking into account not only what he knows, but also what he knows that the Dread Pirate Roberts knows that he knows, and so on. Unfortunately, Vizzini lacks a crucial piece of information, and suffers one of the most understated ends in movie history.

This exchange between the bandit and the pirate relies on ideas about how we reason about the world, and about each other – features of our thinking that are so ingrained we often take them for granted. First is our theory of mind: Our belief that other humans have thoughts, desires, and beliefs like we do. While our thoughts are not the same, I assume that you have an inner experience that is similar to mine. I can understand your motives, intents and feelings. We take this theory of mind for granted. Imagine thinking that other people have no desires or thoughts, that you are surrounded by robots with no inner life. Such mental blindness is a feature of some psychiatric disorders, and can be devastating.

Another idea takes us a step further: We say that something is common knowledge if we both know about it, and you know that I know about it, you know that I know that you know, and so on. Say two children see that I have hidden a cookie in a jar. If they both just know the cookie is there, they might wait until the other leaves to have the cookie for themselves. But if both know that the other knows about the cookie, they might both rush to get it before the other does.

When negotiating over a car or a house, we try to understand what the other person knows, and maybe what they know that we know. Here a result of the mathematician Robert Aumann, who won the Nobel Prize in Economics for his work on conflict and cooperation, may come as a surprise. Aumann gave a mathematical definition of common knowledge. He then showed that two rational beings who start with the same prior beliefs cannot agree to disagree: if their opinions about something are common knowledge, those opinions must be the same, even if each has private information the other lacks. Say you offer to sell me stock at $10 a share. That price can mean you have information that I don’t. I can therefore update my belief about what the stock is worth, and suggest a price I think is fair. Aumann’s result says that after this negotiation ends, we should both believe the same price is fair.
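
Aumann’s theorem itself is about common knowledge, but a 1982 follow-up by John Geanakoplos and Heraklis Polemarchakis (“We Can’t Disagree Forever”) describes a dialogue that gets there. Two people with the same prior beliefs take turns announcing their current estimate of the probability of some event, and each announcement lets everyone rule out possibilities. The toy sketch below assumes a world of four equally likely states; the names, states, and information partitions are hypothetical. Think of the event as, say, “the stock is worth more than $10.”

```python
from fractions import Fraction

def posterior(cell, public, event):
    """Probability of `event` given what an agent privately knows (`cell`) and what
    has been ruled out publicly (`public`), assuming all states are equally likely."""
    known = cell & public
    return Fraction(len(known & event), len(known))

def cell_of(partition, state):
    """The block of an agent's information partition that contains `state`."""
    return next(block for block in partition if state in block)

def dialogue(partitions, event, true_state, states, max_rounds=50):
    public = set(states)                 # states nobody has ruled out publicly yet
    history = []
    for _ in range(max_rounds):
        before = set(public)
        said = []
        for part in partitions:
            q = posterior(cell_of(part, true_state), public, event)
            said.append(q)
            # Everyone hears q and rules out states in which this agent would have said something else.
            public = {w for w in public if posterior(cell_of(part, w), public, event) == q}
        history.append(tuple(said))
        if public == before:             # a full round taught nobody anything new
            break
    return history

states = {1, 2, 3, 4}
alice = [frozenset({1, 2}), frozenset({3, 4})]      # Alice can tell {1, 2} apart from {3, 4}
bob = [frozenset({1, 2, 3}), frozenset({4})]        # Bob can only tell whether the state is 4
event = {1, 4}
history = dialogue([alice, bob], event, true_state=1, states=states)
print(history)
```

Alice starts at 1/2 and Bob at 1/3. Bob’s announcement reveals that state 4 is impossible, Alice’s next announcement reveals that state 3 is impossible too, and from then on both say 1/2.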

Mathematics therefore says that we can’t agree to disagree if we are rational. So why do we disagree about so many things? Perhaps we are never completely rational. Or perhaps we can never get to the bottom of knowing about knowing about knowing. But we do have a theory of mind – the gift of gleaning what it is to be in another’s skin. This gift will not erase discord, but we can use it to lessen it.

References:

In writing this I used some ideas from Scott Aaronson’s post on the subject, which links to a transcript of his lecture. I highly recommend reading other posts on his blog. Prof. Aaronson is a deep thinker and a gifted writer.

For a related example (also mentioned in the lecture above), consider the Muddy Children puzzle, or the more dramatic Blue Eyed Islander Puzzle. The second link leads to Terry Tao’s blog where he writes in more depth about the subject.

There is evidence that some animals have a theory of mind. However, since it is impossible to know what animals are thinking, the extent to which this is the case is unclear. Here is an interesting recent paper on theory of mind and crows.

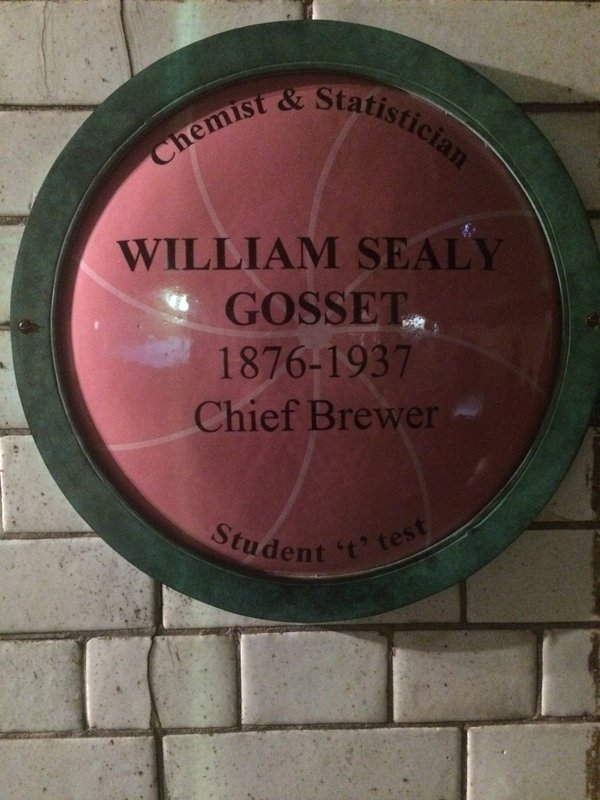

On a recent visit to the Guinness brewery in Dublin I almost walked past a small plaque dedicated to the chief brewer and statistician William Sealy Gosset. Anyone who has taken even a high-school statistics course has heard of Student’s t-test. But not many know that the eponymous “Student” was this brewer from Dublin.

Gosset was hired by the Guinness brewery straight out of Oxford. From the start his theoretical work was motivated by the practical problems of brewing. In the beginning of the 20th century the annual production at Guinness was ramping up towards a billion pints. At such volumes brewers could not inspect every batch of hops and malt extract. Yet, making a product of uniform quality was essential to keeping customers happy. How then to make sure that a big batch of hops was good enough from a few samples? How many measurements of malt extract were needed to ensure that the resulting beer would have the desired alcohol content?

Gosset quickly realized that answering these questions required a mathematical approach. However, existing methods did not apply to the problems he was facing. Chemists and brewers wanted to keep the number of measurements and experiments low to save money and time, while the statistical methods of the day required large samples. To develop a new approach, Gosset spent a year in London at the laboratory of Karl Pearson, the foremost statistician of the time. The result was Student’s t-test, which can be used even with small sample sizes.
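
To see what the t-test does, here is a minimal sketch with invented numbers (not Guinness data): five hypothetical measurements of alcohol content, tested against a hypothetical target of 4.0%. The statistic measures how far the sample mean is from the target in units of its estimated standard error. Gosset’s insight was that, for small samples, this ratio must be judged against Student’s t-distribution rather than the normal distribution.

```python
import math
import statistics

def one_sample_t(samples, target):
    """Student's t statistic for 'does the true mean differ from target?', plus degrees of freedom."""
    n = len(samples)
    mean = statistics.mean(samples)
    s = statistics.stdev(samples)              # sample standard deviation (divides by n - 1)
    standard_error = s / math.sqrt(n)          # typical wobble in the mean of n readings
    return (mean - target) / standard_error, n - 1

# Hypothetical alcohol-content readings (%) from five casks, invented for illustration.
readings = [4.1, 4.3, 4.2, 4.4, 4.0]
t, df = one_sample_t(readings, target=4.0)

T_CRIT_DF4 = 2.776   # two-sided 5% critical value of Student's t with 4 degrees of freedom (standard tables)
Z_CRIT = 1.960       # the corresponding large-sample (normal) cutoff

print(f"t = {t:.2f} with {df} degrees of freedom")
print("significant at 5% by Student's table:", abs(t) > T_CRIT_DF4)
print("large-sample cutoff would have been:", Z_CRIT)
```

Here t is about 2.83 with 4 degrees of freedom, which just clears the 5% threshold of 2.776 from Student’s table. With so few measurements, the large-sample cutoff of 1.96 would declare many results significant that Student’s table would not.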

But why wasn’t the test named after Gosset himself? The problem was that employees of the Guinness company had revealed trade secrets in earlier scientific publications. After some discussion, Gosset was allowed to publish his results only under a pseudonym. As the publications never mentioned brewing or chemistry, competing breweries were unlikely to pay attention. The modest Gosset didn’t seem to mind. During his lifetime he published all but two of his papers under the pseudonym “Student”.

Gosset stayed with the Guinness company for the rest of his life, ending his career as Head Brewer of a London branch. However, he was not a scientific recluse. He kept up a lively correspondence with the best statisticians of the time, and helped develop the foundations of statistics as we know it today. He had virtually no enemies, even in that era of acrimonious disputes between outsized personalities in the field. His contemporaries described him as a generous man without a “jealous bone in his body”.

Even a century ago people spoke of “lies, damned lies, and statistics”. And now, as then, statistics is frequently used to score political points and sway public opinion. But today we use the work of Gosset and other statisticians in areas too numerous to list, not just in brewing beer! We rely on their ideas to see whether new drugs work, and to find planets around distant stars. And the quality control methods that Gosset pioneered are essential for large-scale industrial production. His mathematical ideas help us feed and clothe the world of today.

References:

A short biography of W. S. Gosset. A somewhat longer version is here, with good references. Another short one. About Gosset as a pioneer of industrial quality control.

I recommend the work of Stephen Ziliak for an in-depth discussion of W. S. Gosset’s contributions to the development of statistics, his discussions with R. A. Fisher and other statisticians of the day, and his opinions about interpreting statistical tests. Here is a good example.

We would all like our phone communications to be secure. To ensure that eavesdroppers cannot easily listen in, most of our digital conversations are encrypted. Encryption goes back millennia. When Julius Caesar wanted to write a secret message to, say, Brutus, he would replace every letter with one that appears a fixed number of places further down the alphabet. When shifting by 5 positions, he would replace an A by an F, a B by a G, and so on. An interceptor would see a garbled note. But Brutus would shift each letter five places back in the alphabet and recover the original message.
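
The shift described above takes only a few lines to write down. A minimal sketch, assuming only the 26 capital letters are shifted while spaces and punctuation pass through unchanged:

```python
import string

ALPHABET = string.ascii_uppercase   # "ABC...Z"

def caesar(text, shift):
    """Shift every letter `shift` places along the alphabet, wrapping from Z back to A."""
    result = []
    for ch in text.upper():
        if ch in ALPHABET:
            result.append(ALPHABET[(ALPHABET.index(ch) + shift) % 26])
        else:
            result.append(ch)           # spaces and punctuation are left as they are
    return "".join(result)

secret = caesar("ATTACK AT DAWN", 5)    # what Caesar sends
print(secret)
print(caesar(secret, -5))               # what Brutus recovers by shifting back
```

The same number, 5, both locks and unlocks the message, so anyone who learns it can read everything.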

Since Caesar’s time, ciphers have become far more sophisticated. But until the 1970s, they had a serious problem. The sender and recipient of a message had to share the key to encrypt and decrypt the message. For example, Caesar and Brutus both knew to shift each letter in the alphabet by five places. They either had to agree on this in advance, or had to communicate the key in secret. If the key was intercepted, communications were not secure.

Now imagine a bank that wants to receive sensitive information from its customers. Sharing a separate key with each of millions of clients would be cumbersome, and storing all these keys safely would be difficult. But what if all customers were given the same key to encrypt their messages, and only the bank had the key to decrypt them? In such asymmetric cryptography, the key that locks the message is different from the key that unlocks it.

Such asymmetric encryption schemes may seem impossible. However, mathematicians have shown how to build them. The main idea is to find an operation that is much easier to perform than to undo. For example, mixing oil and vinegar in a dish is easy, but getting the oil back out of the mixture is far harder.

There is a mathematical equivalent of this idea: the bank can give all its customers the same number, and they all use the same recipe to scramble their messages. However, only the bank keeps the number that can be used to unscramble the messages. Think of it as a secret ingredient that can pull all the vinegar out of a mixture and recover only the oil. Without this secret ingredient, anybody wanting to separate the mixture would have to do it droplet by droplet.

The mathematicians Ron Rivest, Adi Shamir and Leonard Adleman were the first to publicly describe a practical way to do this. They used ideas from number theory, one of the most abstract fields of mathematics. Similar schemes are now widely used. Indeed, as in the original scheme, the secrecy of our messages often relies on the fact that nobody knows how to quickly find the two prime factors of a large number that is their product.
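
A toy version of the Rivest-Shamir-Adleman scheme fits in a few lines of Python. This is textbook RSA with tiny primes and no padding, so it is completely insecure and only meant to show the idea: the bank multiplies two secret primes p and q into a public number n. Anyone can scramble a message m into m^e mod n, but undoing this requires a second exponent d, which is easy to compute only if you know p and q. The particular numbers (61, 53, 17) are a common textbook choice.

```python
def make_keys(p, q, e):
    """Toy RSA key generation from two primes p, q and a public exponent e."""
    n = p * q
    phi = (p - 1) * (q - 1)          # count of numbers from 1 to n sharing no factor with n
    d = pow(e, -1, phi)              # modular inverse of e (Python 3.8+); ValueError if none exists
    return (n, e), (n, d)            # (public key, private key)

def apply_key(message, key):
    n, exponent = key
    return pow(message, exponent, n) # modular exponentiation: fast even for huge numbers

public_key, private_key = make_keys(61, 53, 17)
ciphertext = apply_key(65, public_key)           # anyone can do this
recovered = apply_key(ciphertext, private_key)   # only the key holder can do this
print(public_key, ciphertext, recovered)
```

Recovering d from the public pair (3233, 17) requires factoring 3233 = 61 × 53, which is trivial here but, as far as anyone knows, infeasible when n has hundreds of digits.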

If you have bought something online or accessed your bank account over the internet, you have used some version of such asymmetric encryption. Abstract mathematical ideas developed centuries ago have kept your interactions safe. But sophisticated ideas are also being used to break these schemes. And mathematics and computers remain the main weapons in this centuries-long war between code makers and code breakers.

Notes:

RSA encryption was first proposed publicly by Ron Rivest, Adi Shamir and Leonard Adleman in 1978. However, it had been discovered in 1973 by the English mathematician Clifford Cocks while he was working for a UK intelligence agency. This early discovery remained classified until 1997.

An excellent video explaining RSA encryption is available here.

Even the best encryption schemes can be vulnerable to attacks unless implemented well.

With so much of our lives (both online and offline) being recorded and documented, secure encryption is more important than ever. Indeed, encryption has been in the news recently: The FBI requested that Apple decrypt an iPhone used by one of the attackers in the December 2015 mass killing in San Bernardino, CA. The FBI reportedly decrypted the phone without help from Apple. The person behind the Panama Papers leak communicated only through encrypted channels, and remains anonymous. But encryption is essential even if you don’t do anything dangerous or illegal.

A recent lecture by Prof. Alan Huckleberry made me revisit this old essay by Eugene Wigner, and prepare an episode for Engines about it:

The 16th century astronomer Galileo Galilei claimed that the language of nature is mathematics. As far as we can see, Galileo was right. But why is math the language of nature? And why is that language understandable to us?

These questions puzzled the physicist Eugene Wigner. Wigner and other scientists who were part of the revolution that brought us quantum mechanics and relativity used math to express the laws of nature. To their surprise, they found that mathematicians had already invented the language they needed. They’d developed this language without thinking of whether or how their ideas could be applied.

This made Wigner ask why math is so unreasonably effective in describing nature. Much is hidden behind this question: First, why do general laws of nature exist? Even if we assume that without such laws all would be chaos, it is still a wonder that we can discover and understand them. But let us accept that nature is humanly comprehensible.

What still puzzled Wigner is that we have a language of our own making ready at hand to describe the world around us. The words, phrases and ideas of mathematics we need to talk about the laws of nature are often available when we need them. In mathematics, ideas are developed because they flow naturally from previous theories, and because mathematicians find them beautiful. It is then something of a miracle that some of these ideas can be applied not just in physics, but in most other sciences.

Some have argued that math is not as useful as it seems. Maybe we focus too much on problems where math happened to be of great help. In fields like medicine, economics, and the social sciences, general laws have been harder to come by. For example, try to find a short list of rules for the English language. Many have tried and failed.

Some data scientists have therefore argued that we need to embrace the complexity of such systems. We should forget the elegance of math for more pragmatic approaches, and let the data guide us. Yet in practice most of these statistical and machine learning approaches still rely on math.

Mathematics may not be able to unlock all mysteries. But it is still very useful – it lets us describe and understand things infinitesimally small, unimaginably large, and events far in the past and the future. Math gives us a glimpse into realms that we can’t directly experience, and where our intuitions are of no use.

Wigner concludes by saying that mathematics is a wonderful gift which we neither understand nor deserve. He expresses the hope that it will remain useful, to our continued surprise. I do believe that we will increasingly rely on computers to help us make sense of the world around us. Yet I am sure that math will remain the language we use in this conversation.

Notes:

Wigner’s original essay can be found here. Although over 50 years old, it asks questions that we are not much closer to answering. There have been many follow-ups to Wigner’s essay. I have drawn from the ideas of the mathematician R. W. Hamming, and the engineer Derek Abbott.

The precise quote from Galileo: “[The universe] cannot be read until we have learnt the language and become familiar with the characters in which it is written. It is written in mathematical language, and the letters are triangles, circles and other geometrical figures, without which means it is humanly impossible to comprehend a single word.” More quotes here.

I thank Prof. Alan Huckleberry for emphasizing that there is a natural flow of ideas and developments in mathematics that become apparent in retrospect. Yet, I would like to add that there is also a cultural component to these developments. It may thus be better to view the co-development of physics and mathematics as part of a larger cultural evolution of ideas. Perhaps in this larger context the relation between mathematics and physics is more natural. I leave this discussion to historians of science.

Science fiction writers imagine that our descendants will use wormholes to travel instantaneously across galaxies without using much energy. We do not yet know how to cross vast distances instantaneously. However, using celestial mechanics – the mathematics that describes the motion of celestial bodies – astrophysicists have found ways of traversing space using very little fuel.

To understand how this is possible, think first of the Earth and the Moon. Each exerts a gravitational pull on objects, while the two orbit each other. Connect the centers of the Earth and the Moon with an imaginary line. Somewhere along this line, the pull of the Earth equals that of the Moon, but in the opposite direction. Placing a spacecraft at this point is like placing it on a knife’s edge: A small nudge will break the balance of forces and send it drifting towards the Earth or the Moon. This position is called a “libration point”. The mathematicians Leonhard Euler and Joseph-Louis Lagrange showed that there are five libration points in a system of two rotating bodies such as the Earth and the Moon. At these points, the combined gravitational pulls of the two bodies are exactly what is needed to keep an object orbiting in an unchanging position relative to them.
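
The libration point between the Earth and the Moon (usually called L1) can be located numerically. The sketch below works in the frame that rotates with the two bodies, in units where their separation is 1, and assumes approximate values for the Moon’s share of the total mass and the Earth-Moon distance. In this rotating frame the balance also includes the centrifugal effect of the rotation, so L1 ends up somewhat farther from the Moon than the point where the two gravitational pulls alone would cancel. Simple bisection works because the net pull changes sign exactly once between the two bodies.

```python
EARTH_MOON_MU = 0.01215     # Moon mass / (Earth + Moon mass), approximate
EARTH_MOON_KM = 384_400     # mean Earth-Moon distance in km, approximate

def axis_force(x, mu):
    """Net acceleration along the Earth-Moon line in the rotating frame (nondimensional units),
    with the barycenter at x = 0, Earth at x = -mu and Moon at x = 1 - mu.
    Positive means a net push toward the Moon's side."""
    r1 = x + mu             # signed offset from the Earth
    r2 = x - (1 - mu)       # signed offset from the Moon
    return x - (1 - mu) * r1 / abs(r1) ** 3 - mu * r2 / abs(r2) ** 3

def l1_position(mu, tol=1e-12):
    """Bisection between the two bodies: the net pull is negative near Earth, positive near the Moon."""
    lo, hi = -mu + 1e-9, 1 - mu - 1e-9
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if axis_force(mid, EARTH_MOON_MU if mu is None else mu) < 0:
            lo = mid        # still pulled toward Earth: L1 lies farther out
        else:
            hi = mid
    return (lo + hi) / 2

x = l1_position(EARTH_MOON_MU)
from_earth_km = (x + EARTH_MOON_MU) * EARTH_MOON_KM
from_moon_km = (1 - EARTH_MOON_MU - x) * EARTH_MOON_KM
print(f"L1 is about {from_earth_km:,.0f} km from Earth and {from_moon_km:,.0f} km from the Moon")
```

With these values L1 comes out roughly 326,000 km from the Earth and 58,000 km from the Moon. The same function, with a different mass ratio, locates L1 for other pairs such as the Sun and Jupiter.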

Two of these libration points are stable – satellites placed near these points tend to stay there. Indeed, many asteroids can be found near the two stable libration points of the Sun-Jupiter system. These are known as the Trojan asteroids: One libration point is home to the Greek camp, while the other houses the Trojan camp.

However, the remaining three libration points are unstable, and these are key to low energy space travel. Imagine placing a marble exactly at the ridge of a saddle. The marble will roll down the ridge towards the saddle’s center, but a small nudge can make it veer down either side. The unstable libration points are similar to the center of the saddle – a spacecraft at the right position going at the right velocity travels along something like the saddle’s ridge approaching the libration point. A small nudge can make it veer down different paths on either side of the saddle. If this is done just right, the paths leading away from the ridge can take the spacecraft along just the orbit we want it to follow.

Designing a low energy orbit is like rolling a marble along saddle ridges, and providing the right nudges at the right time to leave the ridge along the desired exit trajectory. However, in the Solar System everything is in motion, so the saddles and the ridges keep spinning and swerving through space! Essential to mission design are good mathematical models of the solar system, and the computational tools to solve these models accurately.

Traversing the resulting trajectories can take a long time. However, their benefits can be great. The spacecraft Genesis traveled along such a trajectory to sample the solar wind while using only a fraction of the fuel expended by similar spacecraft in the past.

For thousands of years humans have tried to understand the motion of the planets. The language of mathematics can lead to such an understanding. But mathematics has also provided much more – it has told us how to traverse the Solar System, and may someday lead us far beyond.

Notes:

I am grateful to independent astrodynamics consultant Daniel Adamo, retired NASA planetary scientist Wendell Mendell, and astronaut Michael Barratt for their help in preparing this episode.

I simplified the explanation of the trajectories between libration points here. They are in fact not straight lines, but take the form of spirals on the surface of tubes. These tubes approach closed orbits that surround the libration points. We are accustomed to the closed orbits of planets around the Sun (or any object that has mass) described by Kepler’s laws. But libration points have no mass. It may therefore be surprising that we have closed orbits around points of no mass! A discussion of the mathematics of interplanetary travel along these orbits can be found here. A number of further references, including more technical ones, are provided in this article. Note that the feasibility of interplanetary missions using the same principles remains controversial.
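
The closed orbits around a massless libration point already appear in a linear approximation. Near a collinear point such as L1, the planar motion in the rotating frame of the circular restricted three-body model is approximately linear, and its characteristic exponents come in two kinds: a real pair ±λ (the saddle, along which spacecraft approach and leave) and an imaginary pair ±iω (oscillations circling the point). The Python sketch below assumes that model and a rounded Earth-Moon mass ratio. It locates L1 by bisection, computes c2 = (1 − μ)/r1³ + μ/r2³ from the distances to the two bodies, and solves s⁴ + (2 − c2)s² + (1 + 2c2)(1 − c2) = 0. The function names are illustrative.

```python
import math


def collinear_l1(mu, iterations=200):
    """x-coordinate of L1 in the rotating frame (Earth at -mu, Moon at 1 - mu)."""
    def f(x):
        return x - (1 - mu) / (x + mu) ** 2 + mu / (x - 1 + mu) ** 2

    lo, hi = -mu + 1e-9, 1 - mu - 1e-9  # f(lo) < 0 < f(hi)
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if f(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def linear_exponents(mu):
    """Saddle rate lam and oscillation frequency omega of the linearization at L1.

    Linearized planar equations:
        x'' - 2y' - (1 + 2*c2) x = 0,   y'' + 2x' + (c2 - 1) y = 0,
    with characteristic equation s**4 + (2 - c2) s**2 + (1 + 2*c2)(1 - c2) = 0.
    """
    x = collinear_l1(mu)
    r1, r2 = x + mu, 1 - mu - x  # distances to Earth and Moon
    c2 = (1 - mu) / r1**3 + mu / r2**3
    root = math.sqrt(9 * c2**2 - 8 * c2)
    lam_sq = (c2 - 2 + root) / 2      # s**2 > 0: exponential approach/departure
    omega_sq = (root - (c2 - 2)) / 2  # s**2 = -omega**2 < 0: oscillation
    return math.sqrt(lam_sq), math.sqrt(omega_sq)


MU_EARTH_MOON = 0.01215  # rounded, assumed
lam, omega = linear_exponents(MU_EARTH_MOON)
print(f"saddle rate lambda = {lam:.3f}")             # roughly 2.93
print(f"oscillation frequency omega = {omega:.3f}")  # roughly 2.33
print(f"period of small closed orbits = {2 * math.pi / omega:.2f}")
```

In these units one lunar orbit takes 2π, so a period of about 2.7 corresponds to roughly 12 days. The full nonlinear problem keeps such closed orbits, but finding them precisely requires numerical methods beyond this sketch.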

More about Lagrangian (or Lagrange) points can be found here. The Wikipedia page also has an intuitive explanation of why they appear, as well as a nice picture of the Jupiter Trojans.

Libration points are sometimes referred to as Lagrange points. Joseph-Louis Lagrange was an interesting figure himself. Read the entry for an overview of his rich life that spanned the French Revolution.

This is a book review for the applied math journal SIAM Review. Comments are welcome. A short version of the book can be found here.

Most of us accept that statistics is not applied mathematics. The goal of statistics is to obtain answers from data, and mathematics just happens to be an exceptionally useful tool for doing so. However, for many applied mathematicians statistical analysis is an integral part of daily work. Experimental studies often motivate our research, and we use data to develop and validate our models. To understand how experimental outcomes are interpreted, and to communicate with scientists in other fields, a knowledge of statistics is indispensable.

One issue that we need to take seriously is that misapplications of statistics have led to false conclusions in many, perhaps even most, published studies [1]. Although the “soft sciences” have received the most scrutiny in this regard [3], the “hard sciences” suffer from closely related problems [2]. Anybody who uses the results of statistical data analysis – and this includes most applied mathematicians – needs to be aware of these issues.
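
The core of the argument in [1] fits in one formula. If R is the pre-study odds that a tested relationship is true, 1 − β the statistical power, and α the significance threshold, then, ignoring bias, the fraction of statistically significant findings that are actually true (the positive predictive value) is PPV = (1 − β)R / ((1 − β)R + α). The Python sketch below evaluates it; the values of R and power are assumptions chosen for illustration, not estimates for any real field.

```python
def positive_predictive_value(prior_odds, power, alpha=0.05):
    """Fraction of 'significant' findings that reflect true effects.

    prior_odds: ratio of true to false hypotheses among those tested (R).
    power: probability of detecting a true effect (1 - beta).
    alpha: probability that a null effect comes out significant.
    Assumes no bias and a single test per hypothesis.
    """
    true_positives = power * prior_odds
    false_positives = alpha
    return true_positives / (true_positives + false_positives)


# Hypothetical field where 1 in 11 tested hypotheses is true (R = 0.1).
print(positive_predictive_value(0.1, power=0.8))  # about 0.62
print(positive_predictive_value(0.1, power=0.2))  # about 0.29
```

In this hypothetical field, dropping the power from 80% to 20%, the kind of low power documented in [2], means that fewer than a third of the significant findings would be true.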

As the title suggests, Alex Reinhart’s “Statistics Done Wrong” [4] is not a textbook. Rather, its aim is to explain some of the ways in which data analysis can, and often does, go wrong. The book is related to Darrell Huff’s classic “How to Lie with Statistics” [5], which covers many topics that are now part of freshman courses. Huff, a journalist (and later a consultant to the tobacco industry), provided a lively discussion that alerted general readers to the misuses of statistics by the media and politicians. Since the first edition of Huff’s book in 1954, computational power has increased immensely. But our increased ability to collect and analyze data has also made it easier to misapply statistics. Reinhart’s book aims to introduce today’s consumers of statistics to the resulting problems, and suggests ways to avoid them.

The book starts with a review of hypothesis testing, p-values and statistical power. Here Reinhart introduces a recurrent topic of the book: the errors and “truth inflation” that result from the preference of most journals and scientists for publishing positive results. The ease with which we can analyze data makes the problems of multiple comparisons and double dipping particularly important. The book provides a number of thoughtful examples to illustrate these issues. The last few chapters provide good guides to data sharing, publication, and reproducibility in research. Each chapter ends with a list of useful tips.
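
Two of these pitfalls are easy to see in a short simulation. The Python sketch below assumes independent tests and a simple two-sided z-test with a known standard deviation of 1; all numbers are hypothetical and the function names are illustrative. The first part computes the chance of at least one false positive among m tests of true null hypotheses, with and without a Bonferroni correction (testing each at α/m). The second simulates many small studies of the same modest true effect and keeps only the significant ones, which then overestimate the effect: an instance of truth inflation.

```python
import random
import statistics


def family_wise_error_rate(m, alpha=0.05):
    """Chance of at least one false positive among m independent null tests."""
    return 1 - (1 - alpha) ** m


def significant_estimates(true_effect, n_per_group, n_studies, seed=0):
    """Simulate two-group studies and keep the estimates that reach p < 0.05.

    Each study estimates a difference in means between two groups of size
    n_per_group with known standard deviation 1, so the estimate is normal
    with standard error sqrt(2 / n_per_group). A result counts as
    significant when |estimate / se| > 1.96 (two-sided z-test).
    """
    rng = random.Random(seed)
    se = (2 / n_per_group) ** 0.5
    kept = []
    for _ in range(n_studies):
        estimate = rng.gauss(true_effect, se)
        if abs(estimate / se) > 1.96:
            kept.append(estimate)
    return kept


print(f"20 tests, no correction: {family_wise_error_rate(20):.2f}")             # about 0.64
print(f"20 tests, Bonferroni:    {family_wise_error_rate(20, 0.05 / 20):.3f}")  # about 0.049

kept = significant_estimates(true_effect=0.3, n_per_group=20, n_studies=10_000)
print(f"fraction significant: {len(kept) / 10_000:.2f}")
print(f"mean significant estimate: {statistics.mean(kept):.2f} (true effect 0.3)")
```

In this hypothetical setting only about 16% of the studies reach significance, and those that do report, on average, an effect more than twice the true one.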

Most of these issues are more subtle than those discussed by Huff [5]. While not heavy on math, the book presents arguments that require reflection. The ideas are frequently illustrated with well-chosen examples, which makes the book both informative and entertaining to read.

Reinhart predominantly discusses issues resulting from the misuse of frequentist statistics. This is understandable, as the frequentist approach is currently dominant in most sciences. However, it is worth noting that Bayesian approaches make some of the main problems discussed in the book easier to deal with: Bayesian statistics simplifies the handling of multiple comparisons, and replaces p-values with measures that are easier to interpret. Still, it is not a magic bullet – as Bayesian approaches become more common over the next decades, we may need another volume describing their misuses.
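
To make the contrast concrete, the Python sketch below considers a hypothetical experiment with 60 successes in 100 trials and asks whether the success rate θ exceeds 1/2. The frequentist answer is a one-sided p-value: the probability of 60 or more successes if θ were exactly 1/2. A Bayesian analysis, assuming a uniform prior on θ, gives instead the posterior probability that θ > 1/2 given the data, which is closer to what many readers take a p-value to mean. With a uniform prior the posterior is a Beta(61, 41) distribution, and for integer parameters its tail probability can be computed exactly from a binomial sum.

```python
from math import comb


def binomial_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k + 1))


def one_sided_p_value(successes, trials):
    """P(X >= successes) when X ~ Binomial(trials, 1/2)."""
    return 1 - binomial_cdf(successes - 1, trials, 0.5)


def posterior_prob_above_half(successes, trials):
    """P(theta > 1/2 | data) under a uniform Beta(1, 1) prior.

    The posterior is Beta(a, b) with a = successes + 1, b = failures + 1.
    For integer a, b the Beta CDF at x equals P(Y >= a) for
    Y ~ Binomial(a + b - 1, x), so P(theta > 1/2) = P(Y <= a - 1).
    """
    a = successes + 1
    b = trials - successes + 1
    return binomial_cdf(a - 1, a + b - 1, 0.5)


print(f"one-sided p-value:     {one_sided_p_value(60, 100):.3f}")          # about 0.03
print(f"P(theta > 1/2 | data): {posterior_prob_above_half(60, 100):.3f}")  # about 0.98
```

The two numbers answer different questions: the p-value is a statement about the data under an assumed θ = 1/2, while the posterior probability is a statement about θ itself, and it depends on the chosen prior.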

What is the audience for this book? Many of the topics should be familiar to anybody doing science today. The book could also provide good supplementary material for a second course in statistics.

Doing statistics can be tricky. Finding the right experimental design requires careful consideration of the question to be answered. The interpretation of the results requires a good understanding of the methods that are used. All statistical models are by necessity approximate. Knowing how to verify that the underlying assumptions are reasonable, and choosing an appropriate way to analyze data, are essential. A central point here is that the statistical analysis deserves as much attention as the conclusions we draw from it. Perhaps the most important lesson of this book is that questions of statistical analysis should be addressed when the research is planned.

Reinhart’s book is not a comprehensive list of the different ways in which misuses of statistics can lead us astray. It provides no foolproof answers on how to detect problems in statistical analysis. However, it does an excellent job of introducing a range of common pitfalls, and provides sensible tips on how to avoid them. Doing statistics means accepting that we will be wrong some of the time. The best we can do is to maximize our chances of being right, and understand how likely it is that we are not.

References:

- Ioannidis, J. P. A. Why most published research findings are false. PLoS Medicine 2(8), e124 (2005). http://doi.org/10.1371/journal.pmed.0020124

- Button, K. S., et al. Power failure: why small sample size undermines the reliability of neuroscience. Nature Reviews Neuroscience 14, 365–376 (2013). http://doi.org/10.1038/nrn3475

- Open Science Collaboration. Estimating the reproducibility of psychological science. Science 349(6251), 943 (2015). http://doi.org/10.1126/science.aac4716

- Reinhart, A. Statistics Done Wrong. No Starch Press (2014).

- Huff, D. How to Lie with Statistics. W. W. Norton & Company (1954).